
Hi, I'm Nick! I am a PhD student at IMT Lucca in the DYSCO lab, supervised by Mario Zanon and Alberto Bemporad. I design practical second-order methods for large-scale deep learning, with an emphasis on Gauss-Newton curvature, matrix-free solvers, and JAX-native implementations. Prior to starting my PhD, I worked on autonomous delivery robots at Starship Technologies and risk modeling at CompatibL.

Email / Google Scholar / GitHub / LinkedIn


I work on making second-order optimization practical at scale. My research develops efficient Gauss-Newton curvature approximations and matrix-free solvers that reduce compute and memory overhead, with a focus on diagonal/low-rank structure and multi-class objectives.


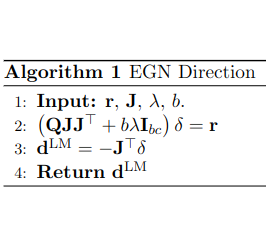

Mikalai Korbit, Adeyemi D. Adeoye, Alberto Bemporad, Mario Zanon

Neurocomputing, 2025

code / arXiv

We propose EGN, a stochastic second-order optimizer that computes Gauss-Newton descent directions by solving the low-rank Gauss-Newton system exactly.
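As a rough illustration of what solving a low-rank Gauss-Newton system exactly can look like, here is a minimal JAX sketch. It is not the EGN implementation. The toy model (`residuals`), the function names, the batch and parameter sizes, and the `damping` value are all assumptions made for this example. The idea shown is the standard push-through identity: when the batch size b is much smaller than the number of parameters d, the damped d x d Gauss-Newton system can be solved through an equivalent b x b system.

```python
import jax
import jax.numpy as jnp


def residuals(w, x, y):
    # Hypothetical model for illustration: a single tanh unit, one residual per sample.
    return jnp.tanh(x @ w) - y


def gn_direction_lowrank(w, x, y, damping=1e-3):
    """Damped Gauss-Newton direction via the small (batch x batch) system."""
    r = residuals(w, x, y)                 # shape (b,)
    J = jax.jacobian(residuals)(w, x, y)   # shape (b, d), Jacobian w.r.t. w
    b = r.shape[0]
    # Push-through identity:
    # (J^T J / b + damping * I_d)^{-1} J^T r / b
    #   = J^T (J J^T + damping * b * I_b)^{-1} r
    small = J @ J.T + damping * b * jnp.eye(b)
    return -J.T @ jnp.linalg.solve(small, r)


def gn_direction_dense(w, x, y, damping=1e-3):
    """Reference: solve the full (d x d) system directly, for comparison only."""
    r = residuals(w, x, y)
    J = jax.jacobian(residuals)(w, x, y)
    b, d = J.shape
    H = J.T @ J / b + damping * jnp.eye(d)
    return -jnp.linalg.solve(H, J.T @ r / b)


# Toy data (assumed sizes): batch of 8 samples, 50 parameters.
key_x, key_y, key_w = jax.random.split(jax.random.PRNGKey(0), 3)
x = jax.random.normal(key_x, (8, 50))
y = jax.random.normal(key_y, (8,))
w = 0.1 * jax.random.normal(key_w, (50,))

direction = gn_direction_lowrank(w, x, y, damping=1.0)
print(direction.shape)  # (50,)
```

In this sketch the linear solve involves only an 8 x 8 matrix instead of a 50 x 50 one, and both routes give the same direction up to floating-point error. The dense version is included purely as a correctness reference.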


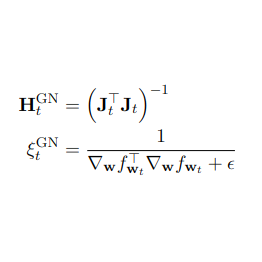

Mikalai Korbit, Mario Zanon

Under Review

code / arXiv

We propose IGND, a scale-invariant, easy-to-tune, fast-converging stochastic optimization algorithm based on approximate second-order information with nearly the same per-iteration complexity as Stochastic Gradient Descent.


I develop and maintain somax, an open-source JAX library for stochastic second-order optimization, with matrix-free curvature operators, practical damping/preconditioning, and end-to-end training utilities.


Mikalai Korbit

code / arXiv

Somax is a library of stochastic second-order methods for machine learning optimization written in JAX. Somax is based on the JAXopt StochasticSolver API, and can be used as a drop-in replacement for JAXopt as well as Optax solvers.


Kudos to Jon Barron for this template!